First, create the table in the HBase shell:

    create 'emp001','member_id','address','info'

Insert data (every column must belong to one of the families declared above, written as family:qualifier):

    put 'emp001','Rain','member_id:id','31'
    put 'emp001','Rain','info:birthday','1990-05-01'
    put 'emp001','Rain','info:industry','architect'
    put 'emp001','Rain','info:city','ShenZhen'
    put 'emp001','Rain','info:country','China'

Query the data:

    get 'emp001','Rain','info'
    scan 'emp001',{COLUMNS => 'info:birthday'}
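To make the shell commands above more concrete, here is a hypothetical, JDK-only toy model of HBase's logical data model: row key → column family → qualifier → value, with each level kept sorted. It is a simplification for illustration, not how HBase stores data (timestamps, versions and byte-level ordering are ignored), and `ToyTable` with its methods are made-up names. It shows two behaviours of the real shell: a `put` into a family that was not declared at `create` time is rejected (HBase raises `NoSuchColumnFamilyException`), and `get` on a family returns its columns sorted by qualifier.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical toy model of HBase's logical data model; NOT how HBase stores data.
// Layout: row key -> column family -> qualifier -> value, each level sorted.
// Timestamps/versions and byte-level ordering are deliberately ignored.
class ToyTable {
    private final Set<String> families;
    private final TreeMap<String, TreeMap<String, TreeMap<String, String>>> rows = new TreeMap<>();

    // Like: create 'table', 'fam1', 'fam2', ...  (families are fixed up front)
    ToyTable(String... families) {
        this.families = new TreeSet<>(Arrays.asList(families));
    }

    // Like: put 'table', row, 'family:qualifier', value
    void put(String row, String column, String value) {
        int colon = column.indexOf(':');
        String family = colon < 0 ? column : column.substring(0, colon);
        String qualifier = colon < 0 ? "" : column.substring(colon + 1);
        if (!families.contains(family)) {
            // HBase likewise rejects writes to a family that was not declared
            throw new IllegalArgumentException("no such column family: " + family);
        }
        rows.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(family, f -> new TreeMap<>())
            .put(qualifier, value);
    }

    // Like: get 'table', row, 'family' -> qualifiers in sorted order (empty if none)
    Map<String, String> get(String row, String family) {
        TreeMap<String, TreeMap<String, String>> byFamily = rows.get(row);
        if (byFamily == null || !byFamily.containsKey(family)) {
            return new TreeMap<>();
        }
        return byFamily.get(family);
    }

    public static void main(String[] args) {
        ToyTable emp = new ToyTable("member_id", "address", "info");
        emp.put("Rain", "info:birthday", "1990-05-01");
        emp.put("Rain", "info:industry", "architect");
        emp.put("Rain", "info:city", "ShenZhen");
        emp.put("Rain", "info:country", "China");
        System.out.println(emp.get("Rain", "info"));
        try {
            emp.put("Rain", "id", "31"); // 'id' is not a declared family
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Writing to a declared family, e.g. `emp.put("Rain", "member_id:id", "31")`, succeeds, while a bare `'id'` is refused because no family of that name exists.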

Add the dependencies and the packaging plugin to pom.xml (both go inside `<project>`):

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>3.8.1</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>1.3.1</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-server</artifactId>
            <version>1.3.1</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-shade-plugin</artifactId>
                <version>2.4.3</version>
                <executions>
                    <execution>
                        <phase>package</phase>
                        <goals>
                            <goal>shade</goal>
                        </goals>
                        <configuration>
                            <transformers>
                                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                    <!-- com.www is a placeholder: set it to the class whose main() the jar should run, e.g. com.dd12345.aaa.CreateTable -->
                                    <mainClass>com.www</mainClass>
                                </transformer>
                            </transformers>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>

Create a table with the Java API:

    package com.dd12345.aaa;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.HBaseAdmin;

    public class CreateTable {

        public static void main(String[] args) throws Exception {
            // HBaseConfiguration.create() also loads hbase-default.xml and hbase-site.xml
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.rootdir", "hdfs://localhost:8020/hbase");
            // The client locates the cluster through ZooKeeper; change this for a remote cluster
            conf.set("hbase.zookeeper.quorum", "localhost");

            HBaseAdmin client = new HBaseAdmin(conf);

            // Table "student" with two column families: info and grade
            HTableDescriptor htd = new HTableDescriptor(TableName.valueOf("student"));
            htd.addFamily(new HColumnDescriptor("info"));
            htd.addFamily(new HColumnDescriptor("grade"));

            client.createTable(htd);
            client.close();
        }
    }

Insert a row:

    package com.dd12345.aaa;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.util.Bytes;

    public class insertOne {

        public static void main(String[] args) throws Exception {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.rootdir", "hdfs://localhost:8020/hbase");
            conf.set("hbase.zookeeper.quorum", "localhost");

            HTable table = new HTable(conf, "student");

            // Row key stu001, column info:name = "Tom"
            Put put = new Put(Bytes.toBytes("stu001"));
            put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), Bytes.toBytes("Tom"));

            table.put(put);
            table.close();
        }
    }
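HBase stores row keys, qualifiers and values as raw byte arrays, which is why every argument in the code above goes through `Bytes.toBytes`. The JDK-only sketch below reproduces what `Bytes.toBytes(String)` (UTF-8) and `Bytes.toBytes(int)` (4 bytes, big-endian) produce, and how `Bytes.toString` decodes, to show that the string `"31"` and the int `31` end up as different bytes. `ByteEncodingDemo` and its methods are hypothetical names for illustration; in a real project you would simply call `org.apache.hadoop.hbase.util.Bytes`.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// JDK-only sketch of the byte encodings produced by HBase's Bytes utility.
// Illustration only; in real code call org.apache.hadoop.hbase.util.Bytes.
class ByteEncodingDemo {
    // Same bytes as Bytes.toBytes(String): the string's UTF-8 encoding
    static byte[] fromString(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Same result as Bytes.toString(byte[]): UTF-8 decoding, and null stays null
    static String toStringOrNull(byte[] b) {
        return b == null ? null : new String(b, StandardCharsets.UTF_8);
    }

    // Same bytes as Bytes.toBytes(int): 4 bytes, big-endian
    static byte[] fromInt(int v) {
        return ByteBuffer.allocate(4).putInt(v).array();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(fromString("31"))); // [51, 49]
        System.out.println(Arrays.toString(fromInt(31)));      // [0, 0, 0, 31]
        System.out.println(toStringOrNull(fromString("Tom"))); // Tom
    }
}
```

A value written as a string (as the shell's `put` does) should therefore be read back with `Bytes.toString`, not `Bytes.toInt`; mixing the two encodings gives a wrong value or an error.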

Read a row (Get):

    package com.dd12345.aaa;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.util.Bytes;

    public class findUp {

        public static void main(String[] args) throws Exception {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.rootdir", "hdfs://localhost:8020/hbase");
            conf.set("hbase.zookeeper.quorum", "localhost");

            HTable table = new HTable(conf, "student");

            // Fetch row stu001 and read the info:name cell
            Get get = new Get(Bytes.toBytes("stu001"));
            Result record = table.get(get);
            // getValue returns null if the cell does not exist; Bytes.toString(null) returns null
            String name = Bytes.toString(record.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));
            System.out.println(name);

            table.close();
        }
    }

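Scan the table:

    package com.dd12345.aaa;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.util.Bytes;

    public class Scann {

        public static void main(String[] args) throws Exception {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.rootdir", "hdfs://localhost:8020/hbase");
            conf.set("hbase.zookeeper.quorum", "localhost");

            HTable table = new HTable(conf, "student");

            // Full-table scan; rows come back in row-key order
            Scan scanner = new Scan();
            ResultScanner rs = table.getScanner(scanner);
            for (Result r : rs) {
                String name = Bytes.toString(r.getValue(Bytes.toBytes("info"), Bytes.toBytes("name")));
                // info:age is never written above, so this prints null unless you insert it
                String age = Bytes.toString(r.getValue(Bytes.toBytes("info"), Bytes.toBytes("age")));
                System.out.println(name + " " + age);
            }
            // Release the server-side scanner before closing the table
            rs.close();
            table.close();
        }
    }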
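A `Scan` returns rows in ascending lexicographic order of their row-key bytes, compared as unsigned values, and it can be limited to a half-open range [startRow, stopRow) (in HBase 1.x via `new Scan(startRow, stopRow)` or `setStartRow`/`setStopRow`). The JDK-only sketch below imitates that ordering with a `TreeMap` and a hand-written unsigned comparator; it is not HBase code, and `RowKeyOrder` with its methods are hypothetical names. It shows why zero-padded keys such as `stu001` are a good habit: without padding, `stu10` sorts before `stu2`.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.TreeMap;

// JDK-only imitation of how HBase orders rows during a scan; not HBase code.
class RowKeyOrder {
    // Row keys are compared byte by byte as UNSIGNED values, shorter prefix first
    static final Comparator<byte[]> UNSIGNED_LEX = (a, b) -> {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int cmp = Integer.compare(a[i] & 0xff, b[i] & 0xff);
            if (cmp != 0) {
                return cmp;
            }
        }
        return Integer.compare(a.length, b.length);
    };

    // Index row keys (UTF-8 encoded, like Bytes.toBytes) in scan order
    static TreeMap<byte[], String> sortedRows(String... rowKeys) {
        TreeMap<byte[], String> rows = new TreeMap<>(UNSIGNED_LEX);
        for (String k : rowKeys) {
            rows.put(k.getBytes(StandardCharsets.UTF_8), k);
        }
        return rows;
    }

    // Order in which a full scan would return these rows
    static List<String> scanOrder(String... rowKeys) {
        return new ArrayList<>(sortedRows(rowKeys).values());
    }

    // Rows a scan with startRow/stopRow would return: start inclusive, stop exclusive
    static List<String> rangeScan(String startRow, String stopRow, String... rowKeys) {
        byte[] start = startRow.getBytes(StandardCharsets.UTF_8);
        byte[] stop = stopRow.getBytes(StandardCharsets.UTF_8);
        return new ArrayList<>(sortedRows(rowKeys).subMap(start, true, stop, false).values());
    }

    public static void main(String[] args) {
        System.out.println(scanOrder("stu2", "stu10", "stu1"));      // [stu1, stu10, stu2]
        System.out.println(scanOrder("stu002", "stu010", "stu001")); // [stu001, stu002, stu010]
        System.out.println(rangeScan("stu001", "stu003", "stu001", "stu002", "stu003", "stu010"));
    }
}
```

The unsigned comparison matters for binary keys: a byte such as `0xff` is negative as a Java `byte` but sorts after `0x01` in HBase.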

Delete the table:

    package com.dd12345.aaa;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.HBaseAdmin;

    public class delete {

        public static void main(String[] args) throws Exception {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.rootdir", "hdfs://localhost:8020/hbase");
            conf.set("hbase.zookeeper.quorum", "localhost");

            HBaseAdmin client = new HBaseAdmin(conf);

            // A table must be disabled before it can be deleted
            TableName student = TableName.valueOf("student");
            client.disableTable(student);
            client.deleteTable(student);

            client.close();
        }
    }
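HBase only deletes a table that is already disabled; calling `deleteTable` on an enabled table fails with a `TableNotDisabledException`, which is why `disableTable` comes first (the shell equivalent is `disable 'student'` followed by `drop 'student'`). The toy state machine below is a hypothetical, JDK-only sketch that captures just that ordering rule; `TableLifecycle` and its `State` enum are made-up names, and the real master performs many more checks.

```java
// Hypothetical toy model of the disable-before-delete rule; not HBase code.
class TableLifecycle {
    enum State { ENABLED, DISABLED, DELETED }

    private State state = State.ENABLED; // a newly created table starts enabled

    State state() {
        return state;
    }

    void disable() {
        if (state != State.ENABLED) {
            throw new IllegalStateException("table is not enabled: " + state);
        }
        state = State.DISABLED;
    }

    void delete() {
        // Mirrors HBase refusing to delete a table that is still enabled
        if (state != State.DISABLED) {
            throw new IllegalStateException("table is not disabled: " + state);
        }
        state = State.DELETED;
    }

    public static void main(String[] args) {
        TableLifecycle student = new TableLifecycle();
        try {
            student.delete();
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
        student.disable();
        student.delete();
        System.out.println(student.state());
    }
}
```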

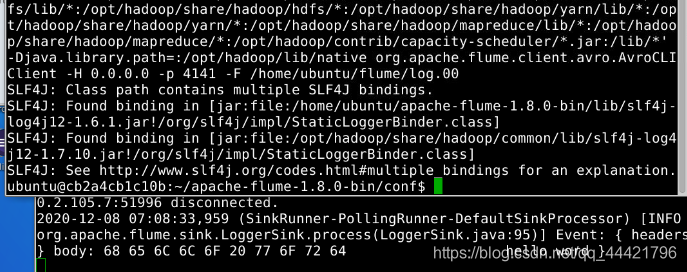

Screenshot of a successful run.

If you have any questions, feel free to message me privately!