A Detailed Record of Building a Fully Distributed Hadoop 3.x Cluster on Linux Servers

| Hadoop node | NameNode | Secondary NameNode | DataNode | ResourceManager | NodeManager |
|---|---|---|---|---|---|
| node001 | * | | * | * | * |
| node002 | | * | * | | * |
| node003 | | | * | | * |

Download Hadoop

Download URL: https://archive.apache.org/dist/hadoop/core/

cd /usr/local/program

wget https://archive.apache.org/dist/hadoop/core/hadoop-3.1.3/hadoop-3.1.3.tar.gz

tar -zxvf hadoop-3.1.3.tar.gz

mv hadoop-3.1.3 hadoop
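
Before extracting the archive, it can be worth comparing the download with the SHA-512 digest that Apache publishes alongside a release. The following is a minimal sketch, assuming `sha512sum` from GNU coreutils is installed; the function name `verify_sha512` is hypothetical, and the expected digest has to be copied from the official checksum file.

```shell
# verify_sha512 FILE EXPECTED_HEX
# Succeeds when FILE's SHA-512 digest equals EXPECTED_HEX (case-insensitive).
verify_sha512() {
  file=$1
  # Normalize the expected digest to lower case, which is what sha512sum prints.
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  actual=$(sha512sum "$file" | awk '{ print $1 }')
  [ "$actual" = "$expected" ]
}
```

It could be used as `verify_sha512 hadoop-3.1.3.tar.gz "<digest from the checksum file>" && tar -zxvf hadoop-3.1.3.tar.gz`, so that extraction only happens when the digests match.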

Configure the cluster environment

cd /usr/local/program/hadoop/etc/hadoop

vim hadoop-env.sh

export JAVA_HOME=/usr/local/program/jdk8

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export HDFS_ZKFC_USER=root

export HDFS_JOURNALNODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root
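
When the start scripts are run as root, Hadoop 3 aborts for any daemon whose matching `*_USER` variable is not defined, so a missing export only shows up later at startup. A small sketch for catching that earlier is shown here; the function name `missing_user_vars` is hypothetical, and checking `HDFS_SECONDARYNAMENODE_USER` assumes the cluster runs a SecondaryNameNode, as this one does on node002.

```shell
# missing_user_vars FILE VAR...
# Prints every VAR that FILE does not export, one per line.
missing_user_vars() {
  env_file=$1; shift
  for var in "$@"; do
    # Commented-out exports do not count, because the line must start with "export".
    grep -Eq "^[[:space:]]*export[[:space:]]+${var}=" "$env_file" || echo "$var"
  done
}
```

For example, `missing_user_vars hadoop-env.sh HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER` prints nothing when all five are exported.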

Edit the configuration files

vim core-site.xml: defines system-level parameters

<configuration>

<!-- Default HDFS file system: the NameNode address; in HA mode, specify the logical name of the HDFS cluster instead -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://node001:9000</value>

</property>

<!-- Hadoop working directory -->

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/program/hadoop/datas/tmp</value>

</property>

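<!-- User name the web UI uses to access data. The default user has very limited permissions, which keeps data safe; a high-privilege user can see other users' data -->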

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

</configuration>

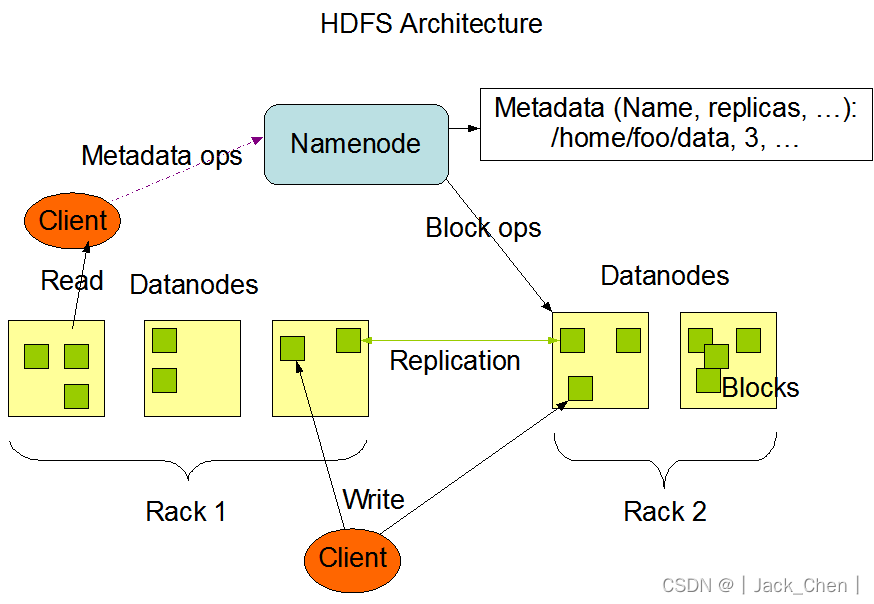
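
Hadoop silently ignores property names it does not recognize, so a misspelled `<name>` produces no error. One way to double-check a file is to read a property back by its exact name. The sketch below does this with `awk`; the function name `get_prop` is hypothetical, and it assumes the simple layout used here, with each `<name>` and `<value>` element on a single line.

```shell
# get_prop FILE KEY
# Prints the <value> that follows <name>KEY</name> in a Hadoop *-site.xml file.
# Prints nothing when KEY is not present.
get_prop() {
  awk -v key="$2" '
    /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
    /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v)
                if (n == key) { print v; exit } }
  ' "$1"
}
```

Running `get_prop core-site.xml fs.defaultFS` should print the configured address, and an empty result is a hint to recheck the spelling. On a node where Hadoop is already installed, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.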

vim hdfs-site.xml: HDFS-related settings

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node002:9871</value>

</property>

<property>

<name>dfs.namenode.secondary.https-address</name>

<value>node002:9872</value>

</property>

<!-- Number of replicas; the default is 3 -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- Where the NameNode stores its data (metadata) -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///usr/local/program/hadoop/datas/namenode</value>

</property>

<!-- Where the DataNode stores its data (blocks) -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///usr/local/program/hadoop/datas/datanode</value>

</property>

</configuration>
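
With `dfs.replication` set to 2, every block is stored on two DataNodes, so usable capacity is roughly half of the raw disk space, and the replica count should not exceed the number of DataNodes. The arithmetic can be sketched as below; both function names are hypothetical, the 300 GB figure in the usage note is made up, and space reserved for non-HDFS use is ignored.

```shell
# usable_capacity_gb RAW_GB REPLICATION
# Rough usable HDFS capacity: every block is stored REPLICATION times.
usable_capacity_gb() {
  echo $(( $1 / $2 ))
}

# replication_fits REPLICATION DATANODES
# Succeeds when there are enough DataNodes to hold every replica of a block.
replication_fits() {
  [ "$1" -le "$2" ]
}
```

For instance, `usable_capacity_gb 300 2` gives 150 for three DataNodes with 100 GB each, and `replication_fits 2 3` succeeds for this three-node layout.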

vim workers: lists the nodes that act as data nodes

node001

node002

node003
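
The start scripts reach every host in the workers file over SSH, so each entry has to be reachable from node001 without a password prompt. This guide does not cover SSH key setup, so treat that as an assumption. The sketch below lists the hosts and tries a non-interactive login to each; the function names `list_workers` and `check_ssh` are hypothetical.

```shell
# list_workers FILE
# Prints the host names in a workers file, skipping blank lines and # comments.
list_workers() {
  sed -e 's/#.*$//' -e 's/[[:space:]]//g' -e '/^$/d' "$1"
}

# check_ssh FILE
# Tries a non-interactive SSH login to every worker and reports failures.
check_ssh() {
  for host in $(list_workers "$1"); do
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true \
      || echo "cannot reach $host without a password"
  done
}
```

Running `check_ssh workers` from node001 should print nothing when all three nodes accept key-based logins.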

vim mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Address of the MapReduce JobHistory server -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>node001:10020</value>

</property>

<!-- Web address of the JobHistory server -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>node001:19888</value>

</property>

<!-- HDFS path where the logs of completed jobs are stored -->

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/history/done</value>

</property>

<!-- HDFS path where the logs of jobs still in progress are stored -->

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/history/done/done_intermediate</value>

</property>

<!-- The following are required; otherwise running MapReduce fails with a message asking you to check whether they are configured -->

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/program/hadoop</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/program/hadoop</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/program/hadoop</value>

</property>

</configuration>

vim yarn-site.xml

<configuration>

<!-- Host of the ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>node001</value>

</property>

<!-- Auxiliary service run on each NodeManager -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

Set the environment variables

vim /etc/profile

export HADOOP_HOME=/usr/local/program/hadoop

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

Sync the environment variable configuration to the other nodes

scp /etc/profile node002:/etc/profile

scp /etc/profile node003:/etc/profile

Reload the environment variable configuration on all three servers

source /etc/profile

Distribute the software to the other nodes

scp -r /usr/local/program/hadoop node002:/usr/local/program/

scp -r /usr/local/program/hadoop node003:/usr/local/program/
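
Copying with absolute source and destination paths avoids depending on the current directory, and a loop keeps the command identical for every node. The following is a sketch only; the function name `distribute` and the `DRY_RUN` switch are hypothetical, and the node names are the ones used in this cluster.

```shell
# distribute SRC DEST NODE...
# Copies SRC to DEST on every NODE with scp.
# With DRY_RUN=1 it only prints the commands it would run.
distribute() {
  src=$1; dest=$2; shift 2
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $src $node:$dest"
    else
      scp -r "$src" "$node:$dest"
    fi
  done
}
```

A dry run such as `DRY_RUN=1 distribute /usr/local/program/hadoop /usr/local/program/ node002 node003` shows the two commands before anything is copied.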

Format the NameNode

Run the format on the NameNode node (node001)

hdfs namenode -format

2022-03-20 19:21:18,897 INFO util.GSet: 0.25% max memory 810.8 MB = 2.0 MB

2022-03-20 19:21:18,897 INFO util.GSet: capacity = 2^19 = 524288 entries

2022-03-20 19:21:18,904 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2022-03-20 19:21:18,904 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2022-03-20 19:21:18,904 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2022-03-20 19:21:18,907 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2022-03-20 19:21:18,907 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2022-03-20 19:21:18,908 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2022-03-20 19:21:18,908 INFO util.GSet: VM type = 32-bit

2022-03-20 19:21:18,908 INFO util.GSet: 0.029999999329447746% max memory 810.8 MB = 249.1 KB

2022-03-20 19:21:18,908 INFO util.GSet: capacity = 2^16 = 65536 entries

2022-03-20 19:21:18,926 INFO namenode.FSImage: Allocated new BlockPoolId: BP-544803462-172.29.234.1-1647775278921

2022-03-20 19:21:18,936 INFO common.Storage: Storage directory /usr/local/program/hadoop/datas/namenode has been successfully formatted.

2022-03-20 19:21:18,957 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/program/hadoop/datas/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2022-03-20 19:21:19,033 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/program/hadoop/datas/namenode/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .

2022-03-20 19:21:19,044 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2022-03-20 19:21:19,048 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid = 0 when meet shutdown.

2022-03-20 19:21:19,048 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at node001/172.29.234.1

************************************************************/
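
Formatting is a one-time step. Running it again generates a new cluster ID, and DataNodes that still hold data from the old ID then fail to register. A guard along the following lines can prevent an accidental second format. It is a sketch that assumes the name directory configured above and relies on the `current/VERSION` file that a formatted name directory contains; the function names are hypothetical.

```shell
# is_formatted NAME_DIR
# Succeeds when NAME_DIR already holds NameNode metadata (current/VERSION exists).
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# format_once NAME_DIR
# Formats the NameNode only if NAME_DIR has not been formatted before.
format_once() {
  if is_formatted "$1"; then
    echo "already formatted: $1"
  else
    hdfs namenode -format
  fi
}
```

On node001 this would be called as `format_once /usr/local/program/hadoop/datas/namenode`.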

Start HDFS

# Start the cluster

start-dfs.sh

# Stop the cluster

stop-dfs.sh

[root@node001 ~]# start-dfs.sh

Starting namenodes on [node001]

Last login: Sun Mar 20 19:36:51 CST 2022 from 39.144.138.187 on pts/1

Starting datanodes

Last login: Sun Mar 20 19:36:56 CST 2022 on pts/0

node002: bash: /usr/local/program/hadoop/bin/hdfs: No such file or directory

node003: WARNING: /usr/local/program/hadoop/logs does not exist. Creating.

Starting secondary namenodes [node002]

Last login: Sun Mar 20 19:36:58 CST 2022 on pts/0

node002: bash: /usr/local/program/hadoop/bin/hdfs: No such file or directory

[root@node001 ~]# jps

5780 DataNode

6294 Jps

5581 NameNode

[root@node002 hadoop]# jps

28467 Jps

28057 DataNode

28284 SecondaryNameNode

[root@node003 ~]# jps

26387 DataNode

30106 Jps

Start YARN

start-yarn.sh

stop-yarn.sh

[root@node001 hadoop]# start-yarn.sh

Starting resourcemanager

Last login: Sat Apr 16 18:32:17 CST 2022 on pts/0

Starting nodemanagers

Last login: Sat Apr 16 18:37:20 CST 2022 on pts/0

[root@node001 hadoop]# jps

7536 DataNode

9510 ResourceManager

7369 NameNode

9676 NodeManager

10063 Jps

[root@node002 program]# jps

13328 NodeManager

12883 SecondaryNameNode

12773 DataNode

13462 Jps

[root@node003 program]# jps

4145 Jps

4006 NodeManager

3726 DataNode
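
Reading three `jps` listings by eye is error-prone, so the comparison against the planned layout can be scripted. The sketch below takes captured `jps` output and prints whichever expected daemons are absent; the function name `missing_daemons` is hypothetical, and the expected daemon list per node comes from the role table at the top of this guide.

```shell
# missing_daemons JPS_OUTPUT DAEMON...
# Prints every DAEMON that does not appear as a process name in JPS_OUTPUT.
missing_daemons() {
  jps_output=$1; shift
  for d in "$@"; do
    # Compare the second column exactly, so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$jps_output" \
      | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
      || echo "$d"
  done
}
```

On node002, for example, `missing_daemons "$(jps)" SecondaryNameNode DataNode NodeManager` should print nothing once both HDFS and YARN are running.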

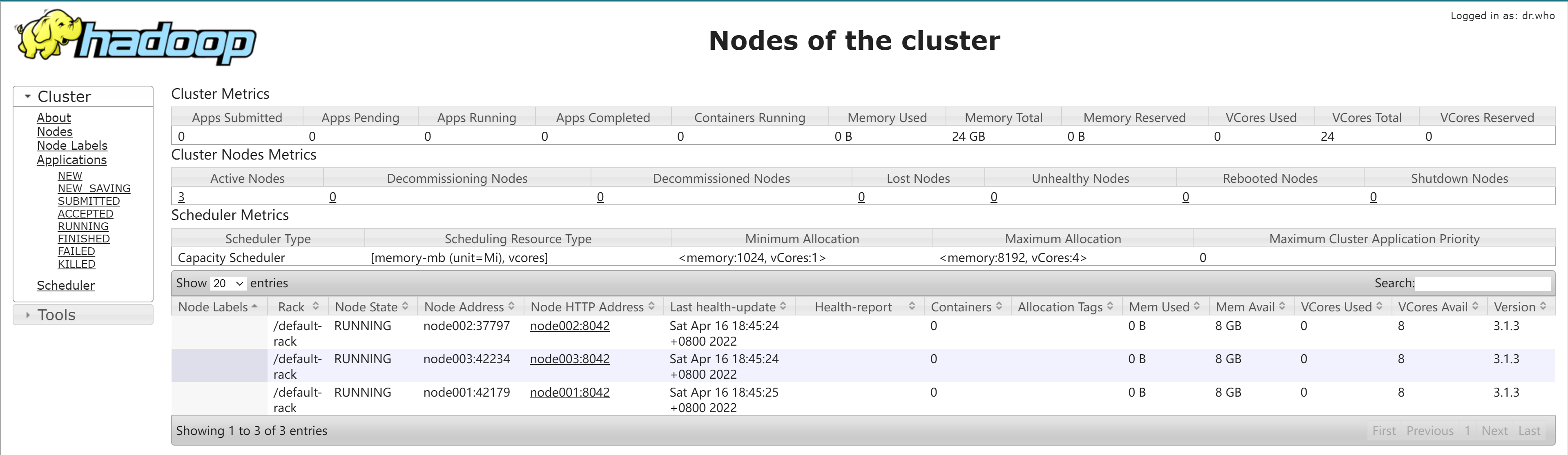

Test the cluster

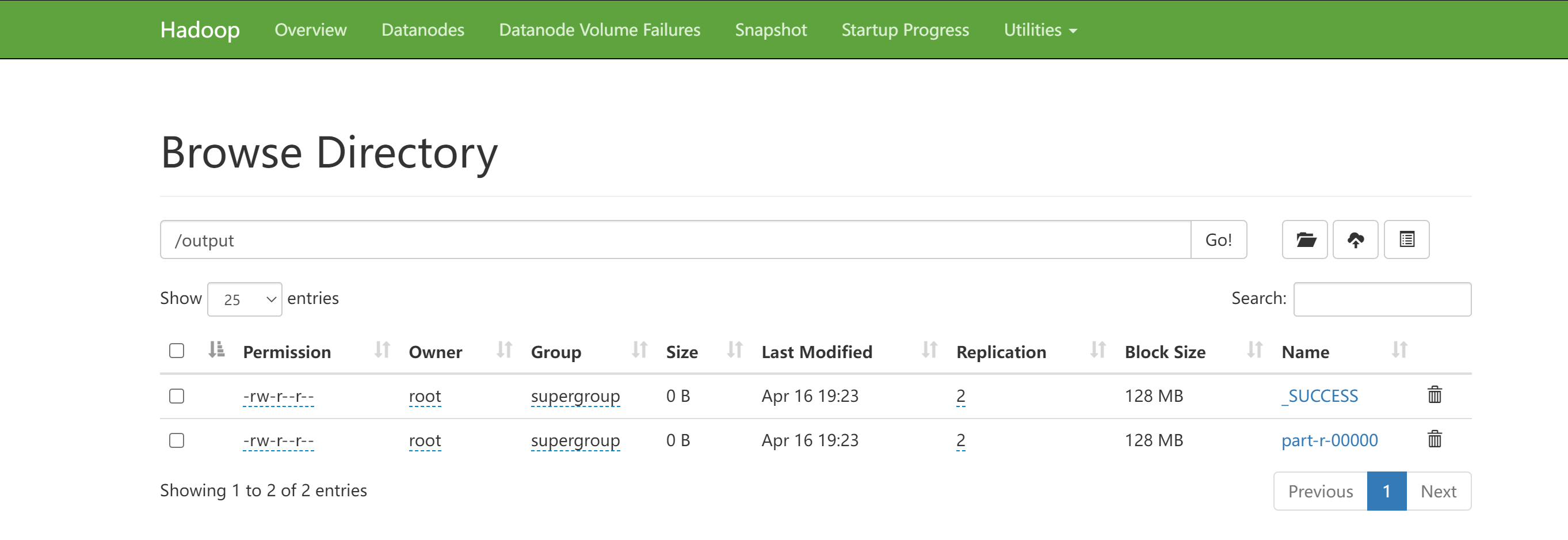

1. HDFS web UI

Open port 9870 on the NameNode's IP address in a browser, for example http://node001:9870

Command-line test

[root@node001 ~]# hdfs dfs -mkdir -p /test

[root@node001 ~]# hdfs dfs -put nginx.yaml /

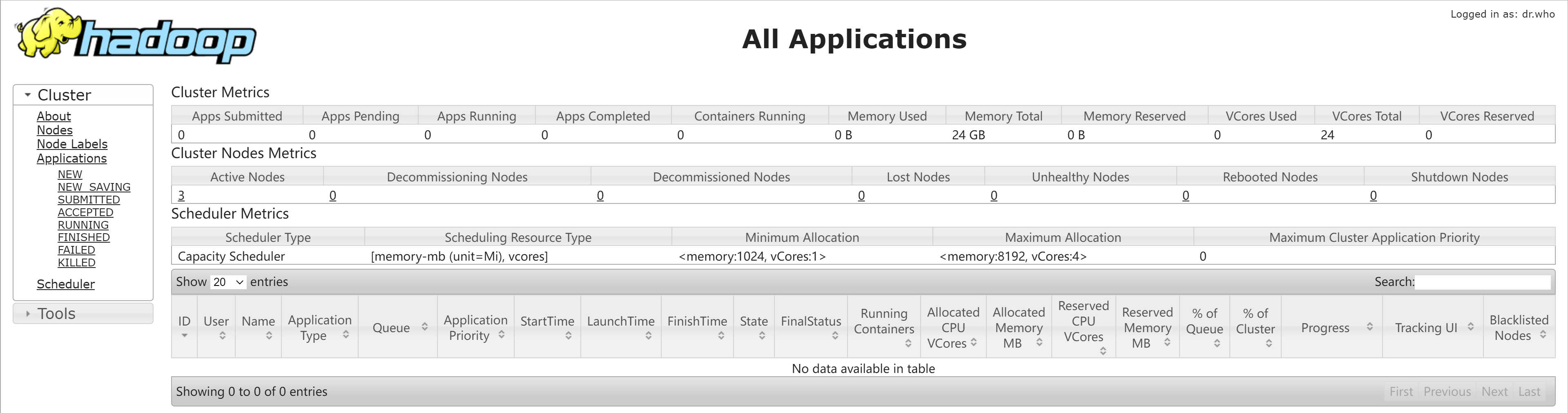

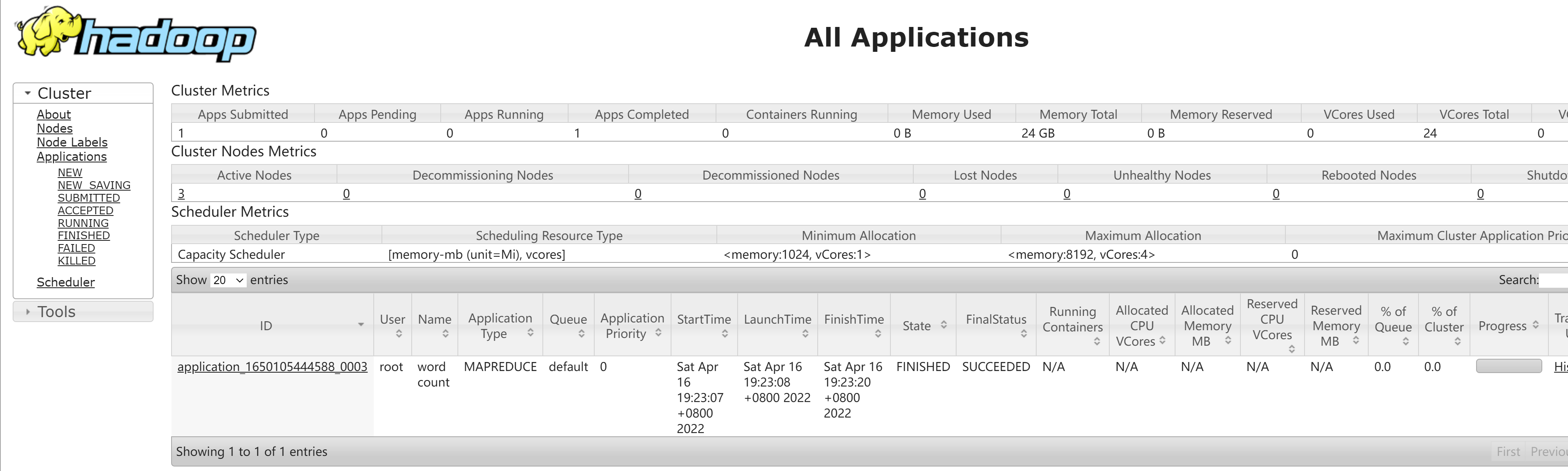

2. YARN web UI

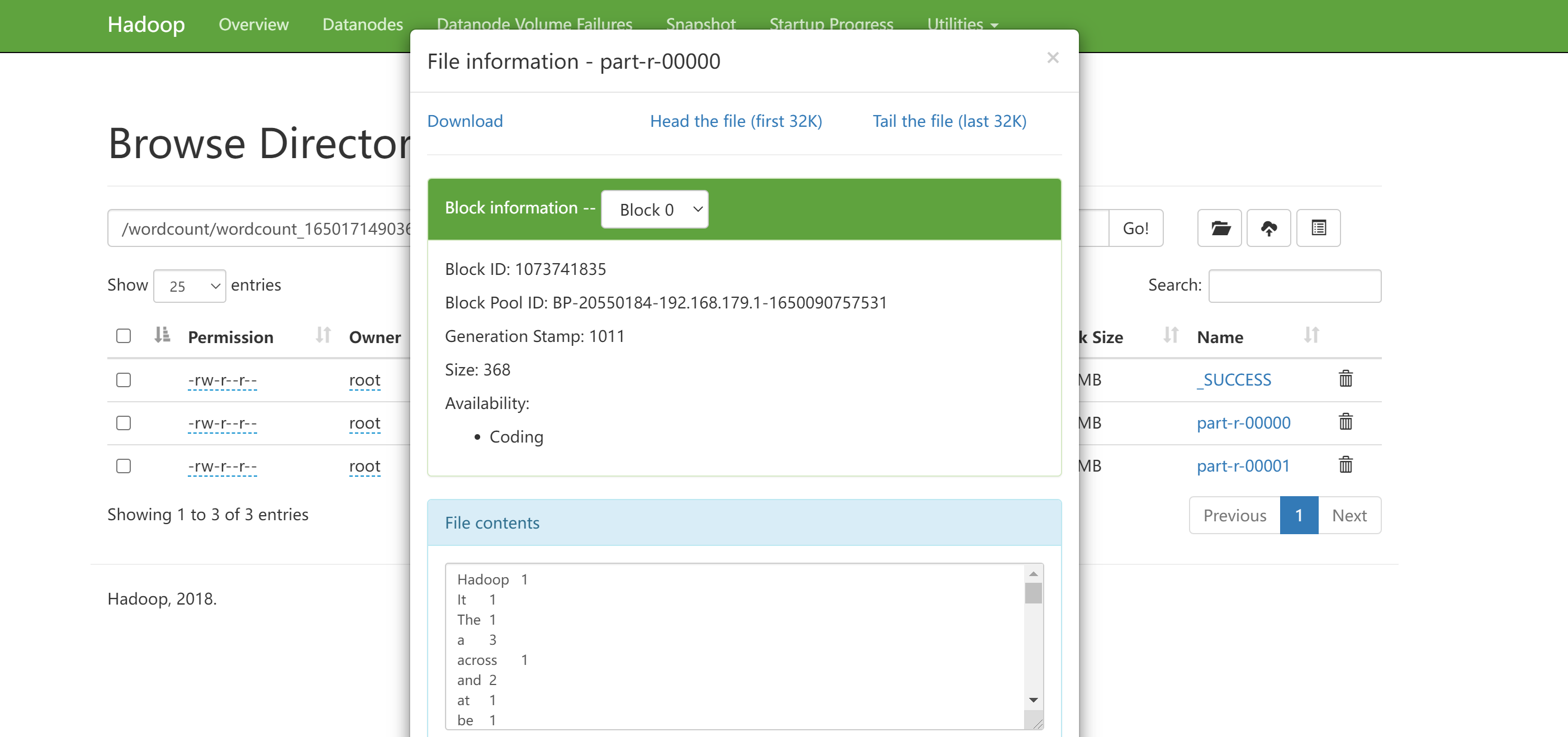

Job test

[root@node001 hadoop]# hdfs dfs -mkdir /input

[root@node001 hadoop]# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output

Java HotSpot(TM) Server VM warning: You have loaded library /usr/local/program/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.

2022-04-16 19:23:05,698 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2022-04-16 19:23:06,343 INFO client.RMProxy: Connecting to ResourceManager at node001/172.29.234.1:8032

2022-04-16 19:23:06,793 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1650105444588_0003

2022-04-16 19:23:06,937 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-04-16 19:23:07,067 INFO input.FileInputFormat: Total input files to process : 0

2022-04-16 19:23:07,078 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-04-16 19:23:07,147 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-04-16 19:23:07,159 INFO mapreduce.JobSubmitter: number of splits:0

2022-04-16 19:23:07,270 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-04-16 19:23:07,689 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1650105444588_0003

2022-04-16 19:23:07,689 INFO mapreduce.JobSubmitter: Executing with tokens: []

2022-04-16 19:23:07,848 INFO conf.Configuration: resource-types.xml not found

2022-04-16 19:23:07,849 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2022-04-16 19:23:08,251 INFO impl.YarnClientImpl: Submitted application application_1650105444588_0003

2022-04-16 19:23:08,296 INFO mapreduce.Job: The url to track the job: http://node001:8088/proxy/application_1650105444588_0003/

2022-04-16 19:23:08,296 INFO mapreduce.Job: Running job: job_1650105444588_0003

2022-04-16 19:23:15,396 INFO mapreduce.Job: Job job_1650105444588_0003 running in uber mode : false

2022-04-16 19:23:15,397 INFO mapreduce.Job: map 0% reduce 0%

2022-04-16 19:23:21,470 INFO mapreduce.Job: map 0% reduce 100%

2022-04-16 19:23:22,499 INFO mapreduce.Job: Job job_1650105444588_0003 completed successfully

2022-04-16 19:23:22,584 INFO mapreduce.Job: Counters: 40

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=217846

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=0

HDFS: Number of bytes written=0

HDFS: Number of read operations=5

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched reduce tasks=1

Total time spent by all maps in occupied slots (ms)=0

Total time spent by all reduces in occupied slots (ms)=3397

Total time spent by all reduce tasks (ms)=3397

Total vcore-milliseconds taken by all reduce tasks=3397

Total megabyte-milliseconds taken by all reduce tasks=3478528

Map-Reduce Framework

Combine input records=0

Combine output records=0

Reduce input groups=0

Reduce shuffle bytes=0

Reduce input records=0

Reduce output records=0

Spilled Records=0

Shuffled Maps =0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=61

CPU time spent (ms)=240

Physical memory (bytes) snapshot=150511616

Virtual memory (bytes) snapshot=1358278656

Total committed heap usage (bytes)=168558592

Peak Reduce Physical memory (bytes)=150511616

Peak Reduce Virtual memory (bytes)=1358278656

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Output Format Counters

Bytes Written=0
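
The run above reports "Total input files to process : 0" because /input was empty, so every counter is zero. To get a result that can be checked, a small text file can be uploaded to /input first and the expected counts computed locally. The sketch below does the local part with standard tools; the function name `expected_counts` is hypothetical, and it assumes the example job splits words on whitespace and writes tab-separated `word count` lines sorted by word.

```shell
# expected_counts FILE
# Prints "word<TAB>count" for every whitespace-separated word in FILE,
# sorted by word in byte order.
expected_counts() {
  tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c \
    | awk '{ print $2 "\t" $1 }'
}
```

With a hypothetical local file `words.txt`, the flow would be `hdfs dfs -put words.txt /input`, then `hdfs dfs -rm -r /output` (the job refuses to start if the output directory already exists), then the same `hadoop jar` command, and finally comparing `hdfs dfs -cat /output/part-r-00000` with `expected_counts words.txt`.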

View the job in the YARN web UI

View the result in the HDFS web UI